Over the last few years, I shared in this blog about multiple objects and method to track and trend Opportunity data in Salesforce:

Trending with Reporting Snapshot

This is a method to capture the trend of Opportunity data by creating a snapshot report, and daily push the result into a custom object. After a while, we will have data to analyze the Opportunity trend based on data stored in the custom object.

Enable Feed Tracking versus Set History Tracking

The idea in this blog is to introduce

OpportunityFeed (prefix

0D5 - FeedItem) and

OpportunityFieldHistory (prefix

017 - Entity History) object.

Once Opportunity is enabled for

Feed Tracking, when users make changes on a few fields in one transaction, it will always create only "one" record in

OpportunityFeed. For a standard object, Salesforce will create a new object end with "Feed", and for a custom object, the object API name will end with "__Feed".

While using

Set History Tracking, Saleforce will create a number of records in the

OpportunityFieldHistory object, based on the number of fields changed, even it happened in one transaction.

Salesforce Opportunity History (Stage History)

This blog is explaining the difference between

OpportunityFieldHistory (prefix

017 - Entity History) and

OpportunityHistory (prefix

008 - OpportunityHistory) object.

Normally, when

Set History Tracking is enabled to an object, Salesforce will create a new object with the suffix "

History" for a standard object or "

__History" for a custom object.

However, for Opportunity, instead of called as "OpportunityHistory" object, field tracking for Opportunity is called as OpportunityFieldHistory. The reason, simply because the API name of the OpportunityHistory object has been used for

Stage History.

Opportunity Stage Aging

This blog is sharing on using "Opportunity History" report type to get the detail of the changes:

- Stage Name

- Amount

- Probability

- Close Date.

Using "Opportunity History" report type, we can get information:

- From Stage

- To Stage

- Stage Change

(Y/N)

- Stage Duration

- Last Stage Change Date

Stage Duration in Opportunity History report type will count the number of days an opportunity is in a particular sales stage. While in Opportunity report type,

Stage Duration is the number of days from the date when opportunity change to current Stage until

today and the number of days keep counting even for the closed opportunity.

Summary:

So far we have discussed a few objects related to Opportunity tracking:

OpportunityHistory

Prefix: 008

Label: Opportunity History

Description: this object store Opportunity Stage detail and used by Opportunity History report type

OpportunityFieldHistory

Prefix: 017

Label: Opportunity Field History

Description: this object store field value changes when History Tracking is enabled for Opportunity

OpportunityFeed

Prefix: 0D5

Label: Opportunity Feed

Description: similar to OpportunityFieldHistory, this object store feed tracking for Opportunity when Feed Tracking is enabled for Opportunity

Next, we are going to introduce another feature called

Historical Trending

API Name:

Opportunity__hd

Prefix: hxx

Label: Opportunities (Historical)

Description: this object store value of Opportunity fields enabled for historical trending, including the before and after values.

Once Historical Trending is enabled, following actions will be triggered:

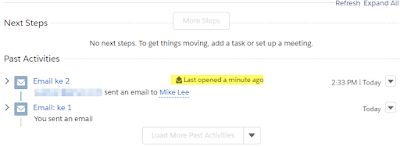

Email

You will get an email from noreply@salesforce.com, this email will tell you that your historical trending data is ready.

New Object

Salesforce will create a

new object, for a standard object, it will end with "

__hd" e.g.

Opportunity__hd, and for a custom object, it will end with "

_hd" e.g.

Object1__c_hd

Since this is considered custom objects, there will be no default prefix, but it will start with hxx, example h00 for the 1st object enabled for Historical Trending, the 2nd object would be h01, and etc.

Report Type

Salesforce will create a custom report type end with "with Historical Trending", e.g.:

- for Opportunity, it will be called "Opportunities with Historical Trending"

- for other objects, it will be called e.g. "Object1 with Historical Trending"

With this report type, you can compare data for up to five points in time (snapshots) within the previous

three full months and all days so far in the current month.

Let us compare between the report and object query. I'll use Opportunity for Historical Trending. Special case for Opportunity: the

Amount,

Close Date,

Forecast Category,

Probability and

Stage fields are always trended, but you can add more fields as required for trending.

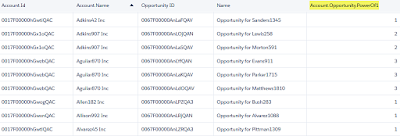

The following report is created with

Opportunities with Historical Trending report type and filter with Close Date within 2018.

* today date = 3 Dec 2018

For this sample, I will query with filter on 2 opportunities shown as above report:

SELECT ParentId, CreatedDate, ValidFromDate, ValidToDate, Amount__hpr, Amount__hst, CloseDate__hpr, CloseDate__hst, StageName__hpr, StageName__hst FROM Opportunity__hd WHERE ParentId IN ('0065000000Tmjwh','0065000000PWibH') ORDER BY ParentId, CreatedDate DESC

Note:

- For each field enable for trending, you will found _hpr and _hst fields

- _hpr = value at the start of the Date time range

- _hst = value at the end of the Date time range

There are a few things we can learn by query Opportunity__hd and the relation with the above report:

- ValidFromDate will determine the values in the trending slot

- If the value changed more than once in a day, only the last value of the day will be shown in the report, in this example: line 3 - $15,000.00 is never shown in the report because it is overwritten to $22,000.00 within the same day.

- Report filter is based on the current data

- The date return in the query is GMT, while the report will be based on the user time zone

Reference: